Twitter is the latest platform to ban a new type of pornographic video that replaces the original actor’s face with that of another person.

The clips, known as deepfakes, typically use the features of female TV personalities, actors and pop singers.

Unlike some social networks, Twitter allows hardcore pornography on its platform.

But it said deepfakes broke its rules against intimate images that featured a subject without their consent.

The San Francisco-based company acted six hours after a Twitter account dedicated to publishing deepfake clips was publicised on a Reddit forum.

News site Motherboard was first to report the development.

“We will suspend any account we identify as the original poster of intimate media that has been produced or distributed without the subject’s consent,” Twitter told Motherboard.

It added that “deepfakes fall solidly” within the type of clips banned by its intimate media policy.

The development followed an announcement by Pornhub that it too would remove deepfake clips brought to its attention.

Until now, the adult site had been a popular source for the material, with some deepfake videos attracting tens of thousands of views.

Video-hosting service Gfycat and chat service Discord had already taken similar action.

Simple software

Deepfakes involve the use of artificial intelligence software to create a computer-generated version of a subject’s face that closely matches the original expressions of another person in a video.

To do this, the algorithm involved requires a selection of photos of the subject’s face taken from different angles.

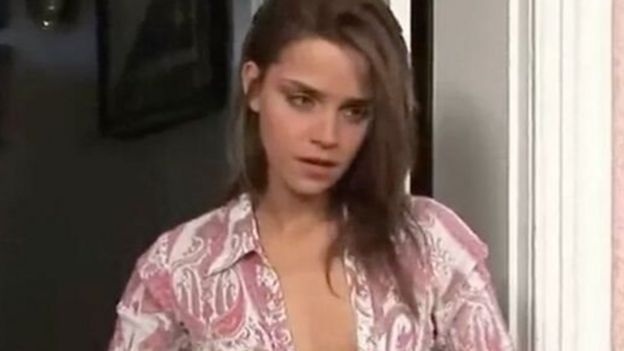

In cases where the two people involved have similar body types, the results can be quite convincing.

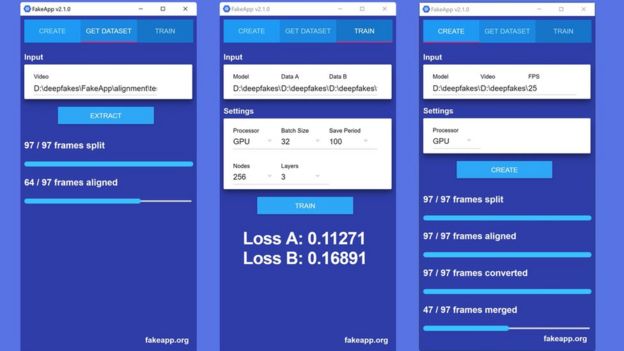

The practice began last year, but became more common in January following the release of FakeApp – a tool that automates the process.

It requires only a single button click once the source material has been provided.

One Reddit group dedicated to sharing clips and comments about the process now has more than 91,000 subscribers.

Child abuse

Not all of the clips generated have been pornographic in nature – many feature spoofs of US President Donald Trump, and one user has specialised in placing his wife’s face in Hollywood film scenes.

But most of the material generated to date appears to be explicit.

While the vast majority of these clips feature mainstream celebrities, some users have in recent days begun generating clips using the faces of YouTube personalities, a move that has proved controversial with others.

In addition, there has been a backlash following reports that some people had created illegal child abuse imagery by using photos of under-16s.

“I saw one… of some little girl from a TV show and told the original poster to take it down,” reported one Reddit member.

Users are also being warned not to try to buy or sell specially commissioned deepfake videos among themselves.

Motherboard revealed one advert for such a service earlier this week, noting that any commercial activity could put those involved at risk of being prosecuted.

More realistic

Some of the clips being created already rival the quality of face-swapping special effects used in films, including Rogue One and Blade Runner 2049.

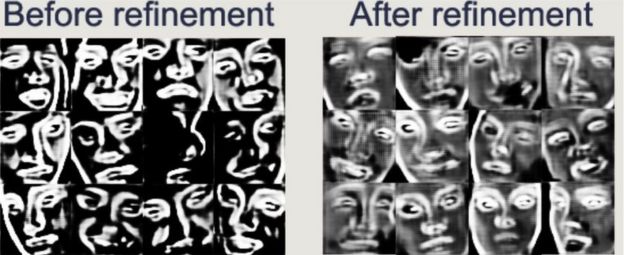

And efforts are being made to make further improvements.

A page on the Github code-sharing site reveals that work is being done to make eyeball movements “more realistic and consistent” with those of the person featured in the original footage.

In addition, attempts are being made to:

- avoid a jittering effect in some clips

- prevent graphical artefacts appearing where the computer-generated face merges with the original body

- produce more natural skin tones

Some users have also begun exploring the use of Amazon Web Services and Google Cloud Platform as an alternative to trying to generate the clips on their own computers.

Such a move could make it possible to generate longer videos without having to wait days, but would involve paying the services a fee for their processing.

–

Source: BBC